In computer science, asymptotic analysis describes how an algorithm scales with an input size that approaches infinity. There are three main types of notation: big-O, big-omega, and big-theta notation.

-notation is the upper bound on an algorithm’s runtime. -notation is the lower bound. -notation (which is a tight upper/lower bound limit) describes precisely how the algorithm grows. For an informal guide to finding big-O, see the page for time complexity.

Formal definition

We describe the running time of algorithms in terms of functions that maps either from/to the set of natural numbers or the set of reals. represents the bound on the number of operations that an algorithm takes. is the function we’re analysing, which can be more complicated than . The running-time function is denoted . If a function belongs to the set of functions (let’s use big-O), we use a notational abuse to say that .

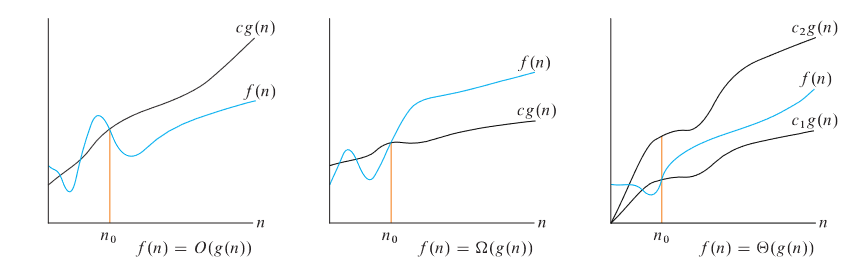

This figure gives some intuition on where comes into play.1 Essentially the value of must be on or below for values to the right of . We’re only interested in the long-term behaviour.

Big-O

For a given function , we denote as the set of functions:

i.e., belongs to if there exists a positive constant such that . This definition requires that any is asymptotically non-negative, i.e., for sufficiently large , must be non-negative.

We also define little-O , which may not be an asymptotically tight upper bound.

Big-Ω

We denote as the set of functions:

i.e., an asymptotic lower bound.

We also define little-omega , which may not be an asymptotically tight lower bound.

Big-Θ

We denote as the set of functions:

i.e., it was an asymptotically tight bound. By theorem, we have if and only if .

It’s usually a good idea to find by proving big-O and big-Ω.

Properties

- If and , then: .

- if and only if .

- if and only if .

- if and only if .

- .

- if and only if .

Then, let and , where the prime denotes an arbitrary function (not the derivative), then:

Computations

Definitionally, we can prove the asymptotic bounds for functions, by making use of algebraic manipulations. This can be a little cryptic sometimes:

- For some compound expression, try to move out of the parentheses.

We can also easily compare the asymptotic bounds of multiple functions with the limit method and with L’Hôpital’s rule.

The logarithmic limit method supposes:

Then, if we take the logarithm:

Practically, how do we use this? At step two, because we have a fraction, we expand out the logarithm to an algebraic expression with in it. We take its result as the exponent for base 2. For , we get . For , we get .

Footnotes

-

From CLRS’ Introduction to Algorithms. ↩