In operating system design, page tables are how physical memory is converted into virtual memory. We divide physical memory into fixed-size pages (usually 4096 bytes). The MMU maps frames in physical memory with pages in virtual memory. Each page is aligned, such that when the lower 12 bits are zero, pages will start.

Anatomy

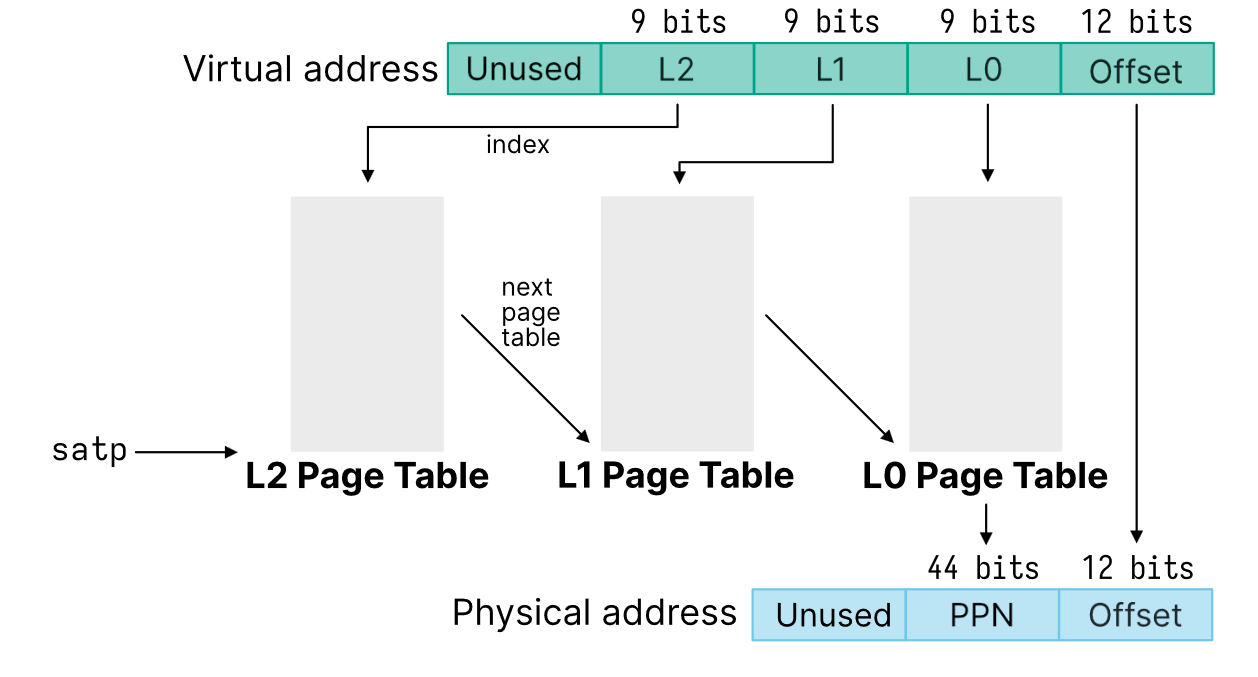

The page table stores address translation for each pages’ location. A few key determinants (assuming a 64-bit system, 39-bit addressing scheme (as in RISC-V)) are:

- Offset bits (12 least significant bits) — used to offset within the physical address.

- Index bits (27 bits after) — maps into the page table, which maps to a specific location in the physical address (44 bits), i.e., to the VPN (virtual page number).

- For multi-level pages, we can use 9, 9, 9 for L2, L1, L0 pages. We iteratively traverse the linked pages. We start with L2, get a pointer to an L1 page table, offset this with our 9 bits, then get a pointer to an L0 page table.

- There are also unused bits, which aren’t used in mapping.

Within the page table, we have a few flags as well:

- Valid bit (

0) — a flag for the MMU to check if address translation should continue. - Readable (

1), writable (2), executable (3) — denotes the permission at the memory address. - Dirty bit (

7) - RSW, reserved for supervisor use (

9to8) - Physical page number (

53to10) — maps the physical memory frame. - And reserved bits that aren’t used (

63to54).

Page tables

With a single level page table, we have to map a significant number of addresses. If each page table entry takes a certain number of bytes, then the page table takes an enormous amount of space in physical memory per process. For a single page table:

Multiple levels address this space — where we map another page table to another. Just like cache design, we have multiple levels (L1, L2, L3). The closest page table on the hierarchy to physical memory is the lowest level (L0).

Each level is mapped to by 9 bits in the virtual address.1 Each entry in the higher level page tables tells us which lower level page table to look at. The L0 table tells us which physical page we map to.

This allows us to use much smaller page tables, so that each page table (each level) fits on a single page. On x86-64 systems, each page table is 4 kB. Assuming 3 bytes per PTE (), this means each page table has entries (byte addressable). With multilevel decoding, this makes address translation slower (time versus space trade-off). To resolve this, we use a translation look-aside buffer.

This allows us to use much smaller page tables, so that each page table (each level) fits on a single page. On x86-64 systems, each page table is 4 kB. Assuming 3 bytes per PTE (), this means each page table has entries (byte addressable). With multilevel decoding, this makes address translation slower (time versus space trade-off). To resolve this, we use a translation look-aside buffer.

The multilevel page tables still support 512 GB. If each entry in the L2 table is filled, and each entry in each L1 table is filled, and each entry in each L0 table is filled, then we have entries, like before. However, in practice, not every entry is filled and we only support a proportional amount of addresses to how much the tables are filled. So if the page tables are only filled 10% (pretty common situation, since few machines have 512 GB of RAM), we only use that much space.

If we have one address, we only need (at minimum) an L0, L1, L2 page table. That’s it!

Given physical pages, the OS maintains a linked list of free pages. The unused pages contain the next pointer in the free list (so unused memory points to more unused memory). Then, to allocate and de-allocate a page, we remove and add from the free list.

The number of page table levels required is given by:

The virtual bit count is how many bits the virtual addresses take up, Offset bits are defined by how large the pages are. Index bits are given by the number of PTEs.

Systems programming

On x86-64, processes use a register satp to set the root page table. Each process has its own root page table. This is managed by a special CPU register that tells the MMU which page table in physical memory should be used. On POSIX systems, we use sbrk to allocate memory. This call grows or shrinks the heap. In the grow case, it grabs pages from the free list.

The kernel will initialise the process’ address space. The stack can only grow downwards, so there’s a “guard page” that is known to be invalid. Then, if we ever access this, we know we get a stack overflow.

Exam questions

Say we have an 8-bit virtual address, 10-bit physical address, and page size of 64 bytes.

-

Pages are bytes, so we have 6 offset bits.

-

2 bits are used for the virtual page number.

- Then, there are virtual pages.

-

4 bits are used for the physical page number.

- And physical pages.

-

There are 6 entries in the page table.

-

If we translate a virtual address, we take the MSB 2 bits as the page table number. The rest is the offset, which shows up in the physical address.

-

store address translation for each page’ location

-

kinda similar to cache design

- offsets, frame number

- valid bit

- protection bit

- present bit (memory versus disc)

- reference bit

- dirty bit

-

no external fragmentation

-

may lead to slow machine

- translation lookaside buffer in MMU

-

swap space pages out of memory and into it

-

etc

-

disk address of a given page

Footnotes

-

From Prof Eyolfson’s lecture slides. ↩