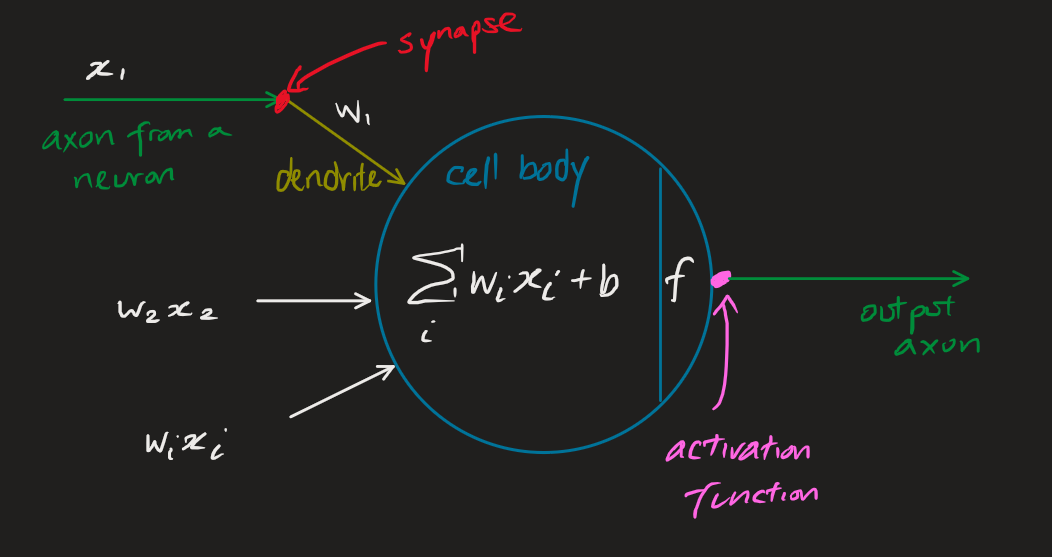

A neuron is an electrically excitable cell. A biological neuron is made up of many complicated parts, and we approximate it with three main ones: dendrites, which receive information from other neurons; a cell body, which consolidates the information from the dendrites; and an axon, which passes information on to other neurons.

A synapse is the junction where the axon of one neuron connects to a dendrite of another. Through these connections, neurons form broader neural networks and pass electrical signals to one another, with different neurons firing in response to different stimuli.

In artificial neural networks, we use a mathematical model to approximate a neuron:

- $x_i$ is an input, such as a pixel in an image.

- The $n$ inputs together form an $n$-dimensional vector $\mathbf{x}$.

- $w_i$ is the weight that we learn for input $x_i$.

- The weights likewise form an $n$-dimensional vector $\mathbf{w}$. This means we can write the multiply/accumulate operation $\sum_{i=1}^{n} w_i x_i$ as the vector product $\mathbf{w}^\top \mathbf{x}$.

- $b$ is the bias, a weight that we learn with no input, i.e., a weight whose input is always equal to 1.

- $f$ is the activation function that determines how our output changes with the sum of all weight-input products.

- $y$ is the output, such as what a given image describes.

- Putting these together: $y = f\left(\sum_{i=1}^{n} w_i x_i + b\right) = f\left(\mathbf{w}^\top \mathbf{x} + b\right)$
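As a minimal sketch of the model above, the snippet below computes $y = f(\mathbf{w}^\top \mathbf{x} + b)$ in plain Python. It assumes a logistic sigmoid for $f$ (the notes do not fix a particular activation), and the function names `sigmoid`, `neuron`, and `neuron_bias_as_weight` are hypothetical. The last function illustrates the point that the bias is just a weight whose input is always 1.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic sigmoid, one common (assumed) choice for the activation f."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(x: list[float], w: list[float], b: float, f=sigmoid) -> float:
    """Single artificial neuron: y = f(w . x + b)."""
    if len(x) != len(w):
        raise ValueError("x and w must both be n-dimensional")
    # Multiply/accumulate, i.e. the dot product w^T x
    z = sum(w_i * x_i for w_i, x_i in zip(w, x))
    return f(z + b)

def neuron_bias_as_weight(x: list[float], w: list[float], b: float, f=sigmoid) -> float:
    """Same neuron, treating b as the weight on an extra constant input of 1."""
    return neuron(x + [1.0], w + [b], 0.0, f)

# Usage: three inputs, three learned weights, one bias
x = [0.5, -1.0, 2.0]
w = [0.4, 0.3, -0.1]
b = 0.1
print(neuron(x, w, b))                 # sigmoid(-0.2), roughly 0.45
print(neuron_bias_as_weight(x, w, b))  # same value
```

Folding $b$ into $\mathbf{w}$ this way is convenient because the whole neuron then reduces to a single dot product followed by $f$.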

Implications

Some interview questions:

- Can we remove a neuron from a neural network?

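- It depends on the architecture. For an MLP, we can: dropping a hidden neuron removes its incoming weights, its bias, and its outgoing weights, and the network still produces an output. Each neuron adds extra non-linearity to its layer, so removing one reduces that non-linearity and can lower the network's performance.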

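- For an RNN, we can't, because its neurons are applied sequentially: the hidden state computed at one time step is fed back in at the next, so each step depends on the ones before it.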
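To make the MLP answer concrete, here is a minimal sketch assuming a one-hidden-layer MLP with ReLU hidden units and weights stored as plain Python lists; `relu`, `mlp_forward`, and `remove_hidden_neuron` are hypothetical names. Removing hidden neuron `j` means deleting row `j` of `W1`, entry `j` of `b1`, and column `j` of `W2`. The smaller network still runs, but its output can change.

```python
def relu(z: float) -> float:
    """ReLU activation (an assumed choice for the hidden layer)."""
    return max(0.0, z)

def mlp_forward(x, W1, b1, W2, b2):
    """One-hidden-layer MLP: h = relu(W1 x + b1), y = W2 h + b2."""
    h = [relu(sum(w * xi for w, xi in zip(row, x)) + bj) for row, bj in zip(W1, b1)]
    return [sum(w * hj for w, hj in zip(row, h)) + bk for row, bk in zip(W2, b2)]

def remove_hidden_neuron(W1, b1, W2, j):
    """Drop hidden neuron j: its row in W1, its bias in b1, its column in W2."""
    W1_new = [row for i, row in enumerate(W1) if i != j]
    b1_new = [bj for i, bj in enumerate(b1) if i != j]
    W2_new = [[w for i, w in enumerate(row) if i != j] for row in W2]
    return W1_new, b1_new, W2_new

# Usage: 2 inputs, 3 hidden neurons, 1 output
x = [1.0, 2.0]
W1 = [[1.0, 0.0], [0.0, 1.0], [1.0, -1.0]]
b1 = [0.0, 0.0, 0.0]
W2 = [[1.0, 1.0, 1.0]]
b2 = [0.0]
print(mlp_forward(x, W1, b1, W2, b2))   # [3.0]

# Remove hidden neuron 0; the network still runs, with a different output
W1s, b1s, W2s = remove_hidden_neuron(W1, b1, W2, 0)
print(mlp_forward(x, W1s, b1s, W2s, b2))  # [2.0]
```

In this toy example, removing neuron 2 instead would leave the output unchanged for this input (its ReLU outputs 0 here), which is one reason the effect of removing a neuron on performance depends on how much that neuron contributes.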