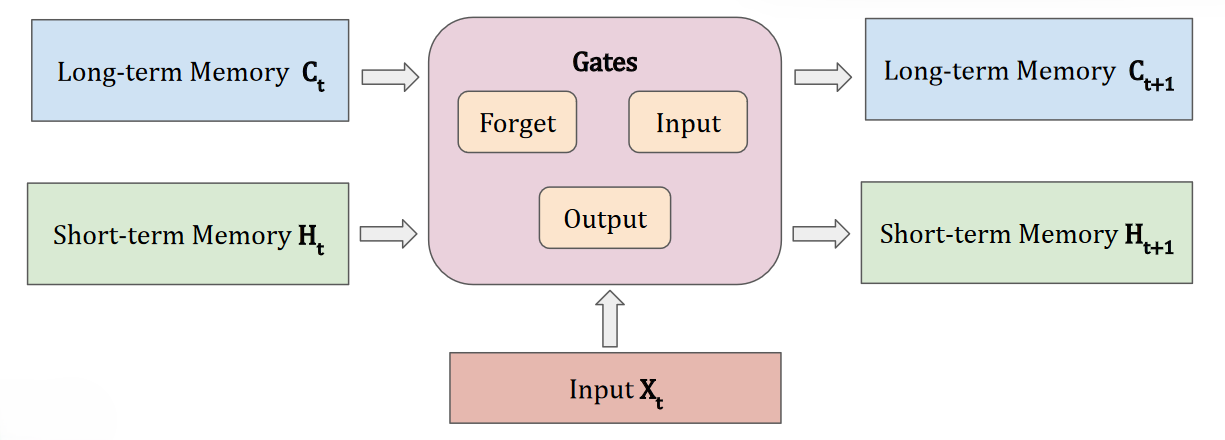

LSTM (long short-term memory) networks are an architectural variation on recurrent neural networks. Each cell maintains a long-term memory (the cell state) and a short-term memory (the hidden state, sometimes called the context). Three gates update these memories: a forget gate, an input gate, and an output gate.

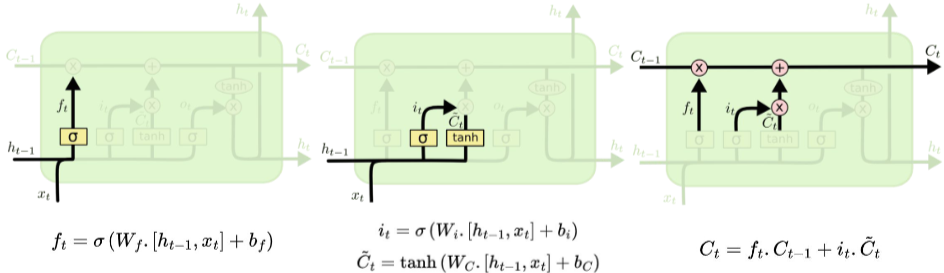

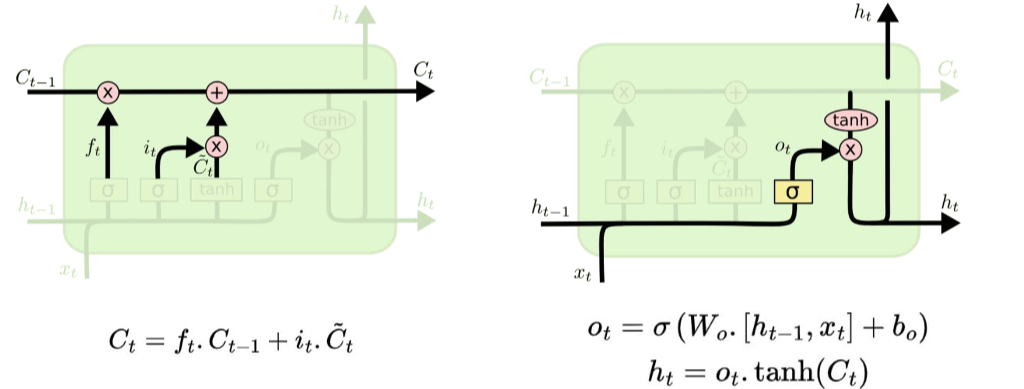

The forget gate acts on the long-term memory: it decides how much of the past memory to discard. The input gate sets how much the current input should contribute to the memory. The updated long-term memory is the portion of the past that is kept (decided by the forget gate) combined with the newly created memory (scaled by the input gate). The output gate acts on the short-term memory: it sets how much of the updated long-term memory is passed on as the new short-term memory.
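The gate interactions described above can be sketched as a single time step in plain Python. This is a minimal illustration, not a reference implementation: it assumes the common formulation with sigmoid gates and a tanh candidate memory, and it uses one hidden unit with a scalar input to keep the arithmetic visible. The names `lstm_step` and `params`, and all weight values, are hypothetical.

```python
import math


def sigmoid(z):
    """Squash a value into (0, 1) so it can act as a gate."""
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, params):
    """One LSTM time step for a single hidden unit (illustrative sketch).

    x      -- current input (scalar)
    h_prev -- previous short-term memory (hidden state)
    c_prev -- previous long-term memory (cell state)
    params -- hypothetical dict: name -> (input weight, hidden weight, bias)
    """
    def pre_activation(name):
        w_x, w_h, b = params[name]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre_activation("forget"))       # how much of c_prev to keep
    i = sigmoid(pre_activation("input"))        # how much new memory to write
    g = math.tanh(pre_activation("candidate"))  # newly created memory
    o = sigmoid(pre_activation("output"))       # how much of the memory to expose

    c = f * c_prev + i * g   # updated long-term memory
    h = o * math.tanh(c)     # updated short-term memory
    return h, c


# Example: run a short sequence through the cell with made-up weights.
params = {
    "forget": (0.5, 0.1, 1.0),
    "input": (0.6, -0.2, 0.0),
    "candidate": (1.0, 0.3, 0.0),
    "output": (0.4, 0.2, 0.0),
}
h, c = 0.0, 0.0
for x in [1.0, -0.5, 0.25]:
    h, c = lstm_step(x, h, c, params)
```

Because every gate value lies strictly between 0 and 1, each gate scales its memory rather than switching it fully on or off. In a real network the scalars would be vectors and the weights would be learned matrices.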
