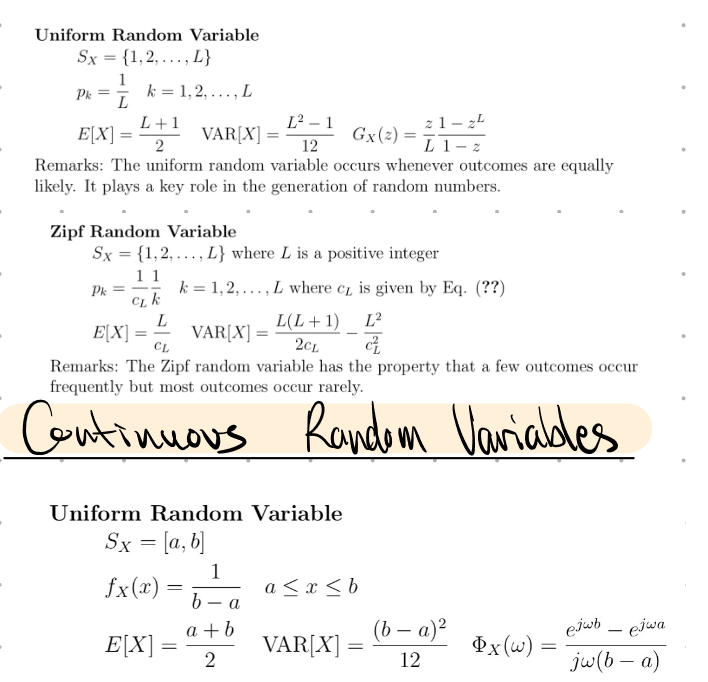

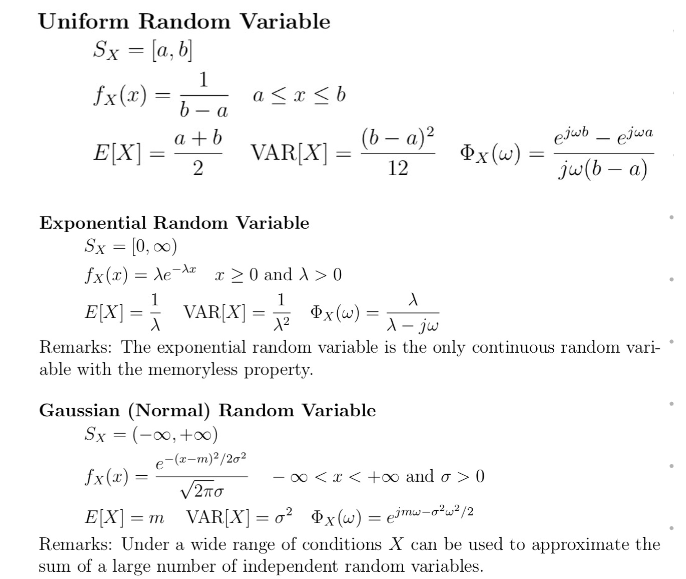

In probability theory, a random variable (RV) assigns a numerical value to each outcome in a sample space. A random variable is discrete if its possible values form a discrete (countable) set. A continuous random variable takes values across an interval of the real line, and its probabilities are given by areas under a curve.

We denote the sample space $S$ as the domain of the random variable $X$, and the set $S_X$ of all values that $X$ can take as the range of the random variable. The way probability is spread over the values of the random variable is called its probability distribution.

Functions

For a random variable $X$ and a real-valued function $g(x)$, we define $Y = g(X)$ as the function evaluated at the random variable's value. $Y$ too is a random variable.

The probability of an event $C$ (involving $Y$) is equal to the probability of the equivalent event $B$ of values of $X$ such that $g(X)$ is in $C$:

$$P[Y \in C] = P[g(X) \in C] = P[X \in B]$$

We can infer from this that:

- The event $\{g(X) \le y\}$ is used to find the CDF of $Y$ directly.

- The event $\{y < g(X) \le y + h\}$, for small $h$, is used to find the PDF of $Y$.

We obtain the PDF of $Y$ by differentiating the CDF $F_Y$ with respect to its input (usually $y$). Because $F_Y$ is expressed through $F_X$, the chain rule means the result usually has an $f_X$ term within it.
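As a hedged illustration of the CDF method, take a hypothetical case that is not from the notes: $X$ uniform on $(0, 1)$ and $g(x) = x^2$. For $0 < y < 1$ the event $\{g(X) \le y\}$ is the same as $\{X \le \sqrt{y}\}$, so $F_Y(y) = F_X(\sqrt{y}) = \sqrt{y}$, and differentiating gives $f_Y(y) = f_X(\sqrt{y}) / (2\sqrt{y})$. The sketch below compares that closed form with a numerical derivative of the CDF; all function names are illustrative assumptions.

```python
import math


def cdf_x(x: float) -> float:
    """CDF of X ~ Uniform(0, 1) (an assumed example distribution)."""
    return min(max(x, 0.0), 1.0)


def pdf_x(x: float) -> float:
    """PDF of X ~ Uniform(0, 1)."""
    return 1.0 if 0.0 < x < 1.0 else 0.0


def cdf_y(y: float) -> float:
    """CDF of Y = X**2: P[X**2 <= y] = P[X <= sqrt(y)] because X >= 0."""
    if y <= 0.0:
        return 0.0
    return cdf_x(math.sqrt(y))


def pdf_y(y: float) -> float:
    """PDF of Y from the chain rule: f_X(sqrt(y)) * d/dy sqrt(y)."""
    if y <= 0.0 or y >= 1.0:
        return 0.0
    root = math.sqrt(y)
    return pdf_x(root) / (2.0 * root)


def numerical_derivative(f, y: float, h: float = 1e-6) -> float:
    """Central-difference approximation of f'(y)."""
    return (f(y + h) - f(y - h)) / (2.0 * h)


y0 = 0.25
approx = numerical_derivative(cdf_y, y0)
exact = pdf_y(y0)
print(f"numerical dF_Y/dy at {y0}: {approx:.6f}, closed form f_Y: {exact:.6f}")
```

The $f_X(\sqrt{y})$ factor in `pdf_y` is the $f_X$ term that the chain rule produces when $F_Y$ is written in terms of $F_X$.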

Multiple random variables

Discrete

The joint PMF for discrete RVs $X$ and $Y$ is given by:

$$p_{X,Y}(x_j, y_k) = P[X = x_j, Y = y_k]$$

As usual, the joint PMF sums to 1 over all pairs of values:

$$\sum_{j} \sum_{k} p_{X,Y}(x_j, y_k) = 1$$

The marginal PMF of $X$ is obtained by taking only one of these sums, over the values of the other variable:

$$p_X(x_j) = \sum_{k} p_{X,Y}(x_j, y_k)$$
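A minimal sketch of these discrete definitions, assuming a made-up joint PMF table (the probabilities and the helper name `marginal` are hypothetical, chosen only so the arithmetic is easy to follow). Exact fractions are used so the normalization check is not affected by floating-point rounding.

```python
from fractions import Fraction

# Hypothetical joint PMF p_{X,Y}(x, y) with X in {0, 1} and Y in {0, 1, 2}.
joint_pmf = {
    (0, 0): Fraction(1, 8),
    (0, 1): Fraction(1, 4),
    (0, 2): Fraction(1, 8),
    (1, 0): Fraction(1, 4),
    (1, 1): Fraction(1, 8),
    (1, 2): Fraction(1, 8),
}


def marginal(pmf, axis):
    """Sum the joint PMF over the other variable (axis 0 keeps X, axis 1 keeps Y)."""
    out = {}
    for pair, p in pmf.items():
        key = pair[axis]
        out[key] = out.get(key, Fraction(0)) + p
    return out


total = sum(joint_pmf.values())     # double sum over all (x, y) pairs
marginal_x = marginal(joint_pmf, 0)  # p_X(x) = sum over y of p_{X,Y}(x, y)
marginal_y = marginal(joint_pmf, 1)  # p_Y(y) = sum over x of p_{X,Y}(x, y)

print("total probability:", total)
print("marginal PMF of X:", marginal_x)
print("marginal PMF of Y:", marginal_y)
```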

Continuous

The joint CDF for continuous RVs $X$ and $Y$ is given by:

$$F_{X,Y}(x, y) = P[X \le x, Y \le y]$$

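The marginal CDF is defined analogously to the marginal PMF, by letting the other variable range over all of its values:

$$F_X(x) = F_{X,Y}(x, \infty) = P[X \le x, Y < \infty]$$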

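The joint PDF can be obtained from the joint CDF by differentiating once with respect to each variable:

$$f_{X,Y}(x, y) = \frac{\partial^2 F_{X,Y}(x, y)}{\partial x \, \partial y}$$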
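To make the CDF relationships concrete, here is a sketch under an assumed example that does not come from the notes: the density $f_{X,Y}(x, y) = x + y$ on the unit square, whose joint CDF inside the square is $F_{X,Y}(x, y) = \tfrac{1}{2} x y (x + y)$. The code recovers the marginal CDF by pushing $y$ to infinity and approximates the joint PDF with a central finite difference for the mixed partial derivative. Function names are illustrative.

```python
def joint_cdf(x: float, y: float) -> float:
    """Joint CDF for the assumed density f(x, y) = x + y on the unit square."""
    # Clamping handles arguments outside [0, 1], including infinity.
    x = min(max(x, 0.0), 1.0)
    y = min(max(y, 0.0), 1.0)
    return 0.5 * x * y * (x + y)


def marginal_cdf_x(x: float) -> float:
    """Marginal CDF of X: F_X(x) = F_{X,Y}(x, infinity)."""
    return joint_cdf(x, float("inf"))


def joint_pdf_numeric(x: float, y: float, h: float = 1e-3) -> float:
    """Central-difference estimate of the mixed partial d^2 F / (dx dy)."""
    return (
        joint_cdf(x + h, y + h)
        - joint_cdf(x + h, y - h)
        - joint_cdf(x - h, y + h)
        + joint_cdf(x - h, y - h)
    ) / (4.0 * h * h)


x0, y0 = 0.3, 0.6
print("F_X(0.5) =", marginal_cdf_x(0.5))
print("numerical joint PDF at (0.3, 0.6):", joint_pdf_numeric(x0, y0))
print("assumed density x + y at (0.3, 0.6):", x0 + y0)
```

The finite difference is only valid at interior points where the step `h` keeps all four evaluation points inside the unit square.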

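The marginal PDF is given by integrating the joint PDF over the other variable:

$$f_X(x) = \int_{-\infty}^{\infty} f_{X,Y}(x, y) \, dy$$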
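The marginal PDF can also be approximated numerically. The sketch below assumes a hypothetical joint density $f_{X,Y}(x, y) = x + y$ on the unit square (zero elsewhere), for which integrating over $y$ gives $f_X(x) = x + \tfrac{1}{2}$ on $[0, 1]$. It uses a plain midpoint rule rather than any library integrator; names and the step count are illustrative choices.

```python
def joint_pdf(x: float, y: float) -> float:
    """Assumed joint density: x + y on the unit square, zero elsewhere."""
    if 0.0 <= x <= 1.0 and 0.0 <= y <= 1.0:
        return x + y
    return 0.0


def marginal_pdf_x(x: float, n: int = 1000) -> float:
    """Midpoint-rule integral of f(x, y) over y in [0, 1], where the density is nonzero."""
    dy = 1.0 / n
    return sum(joint_pdf(x, (k + 0.5) * dy) for k in range(n)) * dy


def total_probability(n: int = 200) -> float:
    """Midpoint-rule integral of the marginal PDF over x in [0, 1]."""
    dx = 1.0 / n
    return sum(marginal_pdf_x((j + 0.5) * dx, n) for j in range(n)) * dx


print("f_X(0.3) numerically:", marginal_pdf_x(0.3))
print("f_X(0.3) closed form:", 0.3 + 0.5)
print("integral of f_X over [0, 1]:", total_probability())
```

Integrating the marginal PDF over its whole range should return 1, which is a quick sanity check that the joint density was normalized.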