In concurrent programming, a mutex (or just a lock) is a way to prevent variable/object/structure state from being modified by other threads. We use locks to make multiple instruction operations appear as if they’re taking place atomically.

Their general use case is as follows: if there’s code in a critical section (i.e., multiple threads may access it), we try to lock the variable.

- If no thread holds the variable, we acquire the lock and enter the critical code.

- If a thread already holds the variable, we wait for the lock to be released before continuing. Thus, only one thread can enter critical code at a time.

- Then we unlock the code.

Deadlocks occur when multiple threads get stuck waiting for the critical section to be unlocked. There are a few key requirements for mutexes:

- Mutual exclusion (obviously)

- Liveness (or progress) — one thread must proceed, the rest must be queued. If it depends on outside threads, it’ll likely deadlock.

- Bounded waiting — if a thread reaches a critical section, it has to proceed eventually.

Implementation

Peterson’s algorithm and Lamport’s bakery algorithm give us ways to implement mutexes in software. However, modern CPUs execute code out-of-order so these aren’t viable.

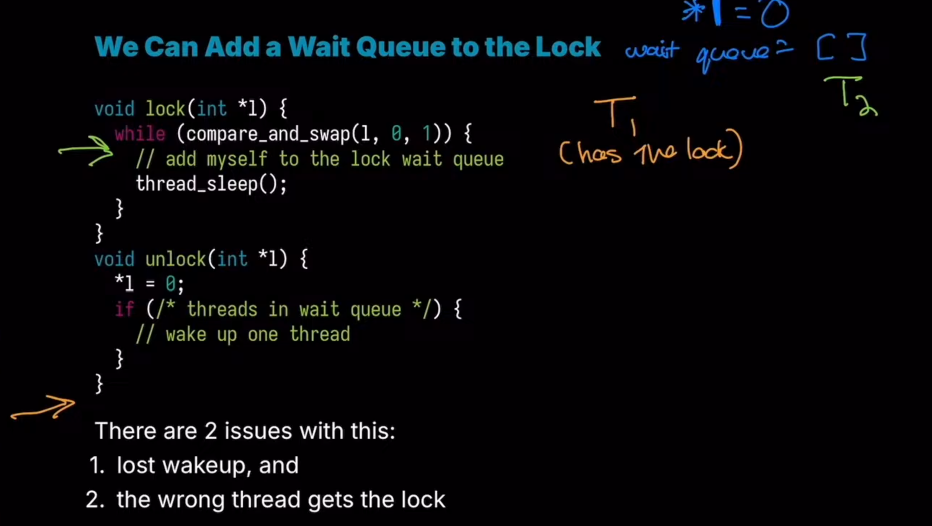

Instead, we return to our basic atomic building blocks. For example, we can use a compare and swap to infinitely loop (inefficient! Should yield instead of loop).

Different lock types include:

- Spinlock

- Read-write locks

Programming

protect linked list with lock

The locking “granularity” is the extent of our locks. Locks incur some overhead (so we shouldn’t have too many locks), may run into contention (too many overlapping threads result in serial execution), and deadlocks.

Implementation-specific

In some languages, mutexes are built-in features, especially in object-oriented languages. We could mark a specific method as “monitored”, and the compiler would insert locks and unlocks for us. In Java, this is the synchronized keyword.

In POSIX systems

We have to properly initialise locks at runtime to guarantee they have correct values. POSIX systems allow us to use different locks to protect different sections (instead of a single big lock used any time critical code is accessed). Static mutexes follow the flow above. Dynamic mutexes are used when we need to modify the default mutex behaviour (using attributes).

Mutex

Link to original

pthread_mutex_init: initialises a mutex. Must be called on a mutex to initialise it to something.

pthread_mutex_t *restrict mutexconst pthread_mutexattr_t *restrict attr: desired attributes. If we want a default mutex, we can passNULL.pthread_mutex_lockpthread_mutex_unlock

In Go

Mutexes are included in the sync library as sync.Mutex. As usual, we have several methods:

mu.Lock()mu.Unlock(). Can be paired with adefer.

Extra notes

basically what’s going on here:

basically what’s going on here:

- doesn’t get to

thread_sleep, but adds - other thread context switches and unlocks

- tries to wake up but nothing wakes

- other thread sleeps

- same idea for wrong thread — if there are multiple threads we could have one context switch before it sleeps