In computer vision, the histogram of oriented gradients (HOG) is a method for extracting image features, primarily for object detection and image classification.

The underlying principle of HOG is to take the orientation (horizontal, vertical, oblique) of the image’s gradient, working on small, fixed-size cells of the image. For each cell, it builds a 1D histogram whose bins are discrete orientation values. Each bin holds the sum of the gradient magnitudes of the pixels in that cell whose gradient orientation falls into the bin (several pixels may share the same orientation).
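
The per-cell histogram described above can be sketched with NumPy. This is a minimal illustration, not the scikit-image implementation: the function name `cell_histogram`, the 8 bins, the unsigned 0 to 180 degree range, and the hard (non-interpolated) binning are all assumptions made for the example.

```python
import numpy as np

def cell_histogram(cell, n_bins=8):
    """Orientation histogram of one grayscale cell, weighted by gradient magnitude."""
    cell = np.asarray(cell, dtype=float)
    # Finite-difference gradients along rows (y) and columns (x).
    gy, gx = np.gradient(cell)
    magnitude = np.hypot(gx, gy)
    # Unsigned gradient orientation in [0, 180) degrees (an assumption of this sketch).
    angle = np.degrees(np.arctan2(gy, gx)) % 180.0
    # Map each angle to a discrete bin index; the clip guards against rounding at 180.
    bin_width = 180.0 / n_bins
    bin_index = np.minimum((angle // bin_width).astype(int), n_bins - 1)
    # Each pixel adds its gradient magnitude to the bin of its orientation.
    return np.bincount(bin_index.ravel(), weights=magnitude.ravel(), minlength=n_bins)

# An 8x8 cell whose intensity increases from left to right:
# every pixel has a horizontal gradient, so all the weight lands in one bin.
ramp = np.tile(np.arange(8.0), (8, 1))
hist = cell_histogram(ramp)
```

Production implementations typically refine this by normalizing histograms over blocks of neighbouring cells, which is what the `cells_per_block` parameter controls in scikit-image.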

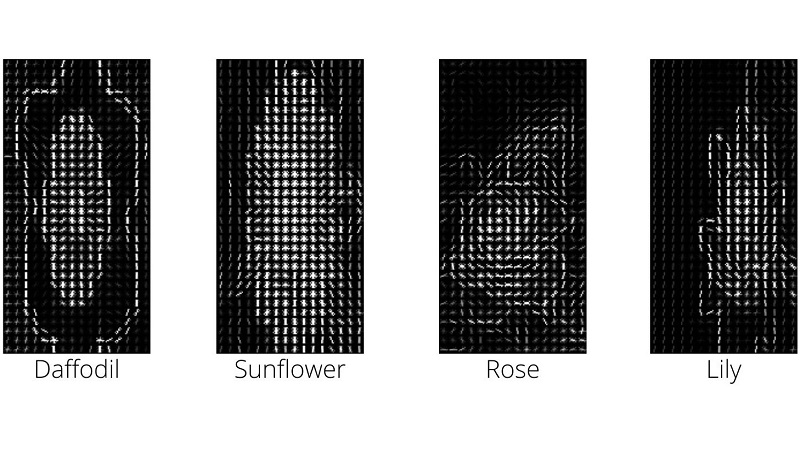

Visually, we can plot each block: its intensity shows how much gradient there is, and its orientation shows the dominant gradient orientation. The result resembles edge detection (in fact, the gradient intensity does pick out some edges).
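
To make the two plotted quantities concrete, the sketch below reduces each cell to a strength (total gradient magnitude) and a dominant orientation bin. The helper `cell_summary`, the 8-pixel cells, and the 8 unsigned bins are assumptions for illustration and do not reproduce how any particular library draws its visualization.

```python
import numpy as np

def cell_summary(image, cell_size=8, n_bins=8):
    """Return (strength, dominant_bin) per cell for a 2D grayscale image."""
    image = np.asarray(image, dtype=float)
    gy, gx = np.gradient(image)
    magnitude = np.hypot(gx, gy)
    angle = np.degrees(np.arctan2(gy, gx)) % 180.0
    bin_index = np.minimum((angle // (180.0 / n_bins)).astype(int), n_bins - 1)

    n_rows = image.shape[0] // cell_size
    n_cols = image.shape[1] // cell_size
    hist = np.zeros((n_rows, n_cols, n_bins))
    for r in range(n_rows):
        for c in range(n_cols):
            rows = slice(r * cell_size, (r + 1) * cell_size)
            cols = slice(c * cell_size, (c + 1) * cell_size)
            hist[r, c] = np.bincount(bin_index[rows, cols].ravel(),
                                     weights=magnitude[rows, cols].ravel(),
                                     minlength=n_bins)
    strength = hist.sum(axis=2)     # how much gradient the cell contains
    dominant = hist.argmax(axis=2)  # index of the strongest orientation bin
    return strength, dominant

# Test image: flat on the left, with a vertical step edge inside the right-hand cells.
image = np.zeros((16, 16))
image[:, 12:] = 1.0
strength, dominant = cell_summary(image)
```

Only the cells containing the step edge get a non-zero strength, which is why the plot looks like an edge map. Note that the orientation here is that of the gradient, which is perpendicular to the edge itself.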

In code

A HOG implementation is provided within scikit-image:

    from skimage.feature import hog

    # `image` is a 2D grayscale array
    features = hog(image, orientations=8,
                   pixels_per_cell=(8, 8),
                   cells_per_block=(3, 3),
                   visualize=False)
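
As a sanity check on the returned feature vector, its length can be estimated from the parameters. The helper below is hypothetical (it is not part of scikit-image) and assumes that blocks slide one cell at a time, that only blocks fitting entirely inside the image are kept, and that the result is flattened.

```python
def hog_feature_length(image_shape, orientations=8,
                       pixels_per_cell=(8, 8), cells_per_block=(3, 3)):
    """Expected length of a flattened HOG descriptor (hypothetical helper)."""
    # Whole cells that fit along each image axis.
    n_cells_row = image_shape[0] // pixels_per_cell[0]
    n_cells_col = image_shape[1] // pixels_per_cell[1]
    # Assumption: blocks advance by one cell and must fit inside the cell grid.
    n_blocks_row = n_cells_row - cells_per_block[0] + 1
    n_blocks_col = n_cells_col - cells_per_block[1] + 1
    # Each block contributes one histogram per cell it covers.
    return (n_blocks_row * n_blocks_col
            * cells_per_block[0] * cells_per_block[1] * orientations)

# 128x64 image: 16x8 cells, 14x6 blocks, 3*3 cells per block, 8 bins each.
length = hog_feature_length((128, 64))  # 14 * 6 * 9 * 8 = 6048
```

Under these assumptions, comparing `length` with `features.shape[0]` is a quick way to confirm that the parameters are being interpreted as intended.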