In deep learning, the term graph neural networks (GNNs) refers broadly to deep learning techniques applied to graph-structured data (nodes connected by edges), as opposed to tabular or grid-structured data such as images.

A popular library for GNNs is PyTorch Geometric. Common types of GNNs include graph convolutional networks (GCNs) and graph attention networks (GATs).
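
As a rough illustration of what graph-structured data looks like in code, the sketch below stores a small undirected graph as node features plus a COO-style edge list. It mirrors the `[2, num_edges]` `edge_index` layout that PyTorch Geometric uses (row 0 = source nodes, row 1 = target nodes), but uses plain Python lists instead of tensors. The feature values and the helper names `neighbours` and `to_dense_adjacency` are hypothetical, chosen only for illustration.

```python
from collections import defaultdict

# Node features: one vector per node (made-up values for illustration).
x = [
    [1.0, 0.0],  # node 0
    [0.0, 1.0],  # node 1
    [1.0, 1.0],  # node 2
    [0.5, 0.5],  # node 3
]

# COO edge list: row 0 = source nodes, row 1 = target nodes.
# Each undirected edge is stored twice, once per direction (path 0-1-2-3).
edge_index = [
    [0, 1, 1, 2, 2, 3],
    [1, 0, 2, 1, 3, 2],
]


def neighbours(edge_index):
    """Hypothetical helper: build an adjacency list {target: [sources]}."""
    adj = defaultdict(list)
    for src, dst in zip(edge_index[0], edge_index[1]):
        adj[dst].append(src)
    return dict(adj)


def to_dense_adjacency(edge_index, num_nodes):
    """Hypothetical helper: convert the edge list into a dense 0/1 matrix."""
    A = [[0] * num_nodes for _ in range(num_nodes)]
    for src, dst in zip(edge_index[0], edge_index[1]):
        A[src][dst] = 1
    return A


adj = neighbours(edge_index)
A = to_dense_adjacency(edge_index, num_nodes=len(x))
print(adj)  # {1: [0, 2], 0: [1], 2: [1, 3], 3: [2]}
```

The edge-list form scales to sparse graphs, since storage grows with the number of edges rather than with the square of the number of nodes; the dense matrix is shown only because it is easier to read.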

Graphs

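One difference with GNNs is that the input has no inherent order, because the nodes of a graph are unordered: relabelling the nodes (and their edges accordingly) describes the same graph. By contrast, in most RNN and CNN tasks the order of the data does matter (shuffling the words of a sentence or the pixels of an image destroys its meaning).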
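
A small sketch of why ordering matters: a graph-level readout that sums node features gives the same result however the nodes are numbered, while a readout that weights nodes by their position (as a sequence model implicitly does) does not. The feature values, the permutation, and both readout functions are hypothetical; the toy readouts ignore edges, which under a relabelling would be renumbered with the same permutation.

```python
# Node features for a 3-node graph (made-up values).
x = [[1.0, 0.0], [0.0, 2.0], [3.0, 1.0]]

# Relabel the nodes: new position i holds old node perm[i].
perm = [2, 0, 1]
x_perm = [x[i] for i in perm]


def sum_readout(features):
    """Order-invariant: element-wise sum over all nodes."""
    return [sum(col) for col in zip(*features)]


def positional_readout(features):
    """Order-sensitive: weights each node by its position, like a sequence."""
    return [sum((pos + 1) * v for pos, v in enumerate(col)) for col in zip(*features)]


print(sum_readout(x), sum_readout(x_perm))                # [4.0, 3.0] [4.0, 3.0]
print(positional_readout(x), positional_readout(x_perm))  # [10.0, 7.0] [5.0, 7.0]
```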

A transformer without positional encoding can also be viewed as a GNN. Instead of using a given graph structure, it computes an attention matrix of pairwise importance scores; that is, it treats the tokens as a fully-connected graph, represented as an adjacency matrix, and learns the edge weights.
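
The sketch below computes single-head self-attention with no positional encoding and returns the attention matrix, which plays the role of a dense, weighted adjacency matrix over a complete graph: every entry is positive and each row sums to 1. It assumes the standard scaled dot-product form softmax(QKᵀ/√d)·V; the small weight matrices `Wq`, `Wk`, `Wv` are made-up stand-ins for learned parameters. Because there is no positional information, permuting the input tokens simply permutes the rows and columns of the attention matrix and the rows of the output.

```python
import math


def matmul(X, W):
    """Multiply matrix X (n x d_in) by W (d_in x d_out), both as nested lists."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*W)] for row in X]


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(h, Wq, Wk, Wv):
    """Single-head scaled dot-product attention, no positional encoding."""
    Q, K, V = matmul(h, Wq), matmul(h, Wk), matmul(h, Wv)
    d = len(Q[0])
    # Attention matrix: every token attends to every token, i.e. a complete
    # graph whose edge weights are the softmax-normalised scores.
    A = [softmax([sum(q * k for q, k in zip(qi, kj)) / math.sqrt(d) for kj in K])
         for qi in Q]
    # Each output row is a weighted sum of value vectors over all "neighbours".
    out = [[sum(A[i][j] * V[j][c] for j in range(len(V))) for c in range(len(V[0]))]
           for i in range(len(V))]
    return A, out


# Token embeddings and hypothetical (not learned) projection matrices.
h = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
Wq = [[1.0, 0.5], [0.0, 1.0]]
Wk = [[0.5, 0.0], [1.0, 1.0]]
Wv = [[1.0, 0.0], [0.0, 1.0]]

A, out = self_attention(h, Wq, Wk, Wv)

# Reordering the tokens only reorders the graph: A_p[i][j] == A[perm[i]][perm[j]].
perm = [2, 0, 1]
A_p, out_p = self_attention([h[i] for i in perm], Wq, Wk, Wv)
```

Adding positional encodings would break this symmetry, which is why a transformer with them is usually treated as a sequence model rather than a graph model.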

Message-passing

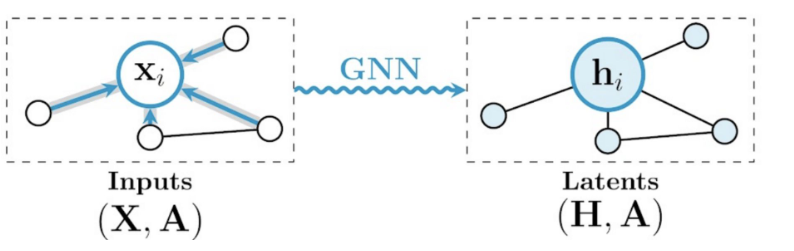

GNNs are based on message passing, which allows nodes to exchange information with their neighbours. In each layer, a node aggregates the embeddings of its neighbours, combines this aggregate with its own current embedding, and uses the result as its updated embedding. Repeating this over several layers lets each node capture local structural information from its neighbourhood. The aggregation function must be order-invariant (similar to Deep Sets), because a node's neighbours have no natural order; sum, mean, and max are common choices.
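
A minimal sketch of one message-passing layer, written with plain lists. It assumes mean aggregation and an update of the form h_v' = ReLU(W_self·h_v + W_neigh·mean(h_u for u in N(v))), which is one common choice among many; the weight matrices are fixed, made-up values rather than learned parameters, and the graph is the path 0-1-2-3.

```python
def matvec(W, v):
    """Multiply matrix W (nested lists) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def mean_aggregate(vectors):
    """Order-invariant aggregation: element-wise mean of the neighbour messages."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def relu(v):
    return [max(0.0, x) for x in v]


def message_passing_layer(h, adj, W_self, W_neigh):
    """One layer: aggregate neighbour embeddings, combine with self, update."""
    new_h = []
    for v in range(len(h)):
        msgs = [h[u] for u in adj[v]]  # messages = neighbour embeddings
        # Isolated nodes receive a zero message.
        agg = mean_aggregate(msgs) if msgs else [0.0] * len(h[v])
        combined = [a + b for a, b in zip(matvec(W_self, h[v]), matvec(W_neigh, agg))]
        new_h.append(relu(combined))
    return new_h


# Path graph 0-1-2-3 as an adjacency list, with made-up node embeddings.
h = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]]
adj = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
W_self = [[1.0, 0.0], [0.0, 1.0]]   # hypothetical weights
W_neigh = [[0.5, 0.0], [0.0, 0.5]]  # hypothetical weights

out = message_passing_layer(h, adj, W_self, W_neigh)
print(out)  # [[1.0, 0.5], [0.5, 1.25], [1.125, 1.375], [1.0, 1.0]]
```

Because the mean ignores the order in which neighbours are listed, shuffling each node's neighbour list leaves the output unchanged. Stacking k such layers lets information travel k hops, so after two layers node 0 is influenced by node 2.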

Resources

- A Gentle Introduction to Graph Neural Networks by authors at Google Research